LabVIEW Proxy: Optimize GPU resources to run multiple AI models

A LabVIEW application cannot release its GPU resources until it is shut down completely. As a result, you must start a new LabVIEW session each time you want to load another AI model.

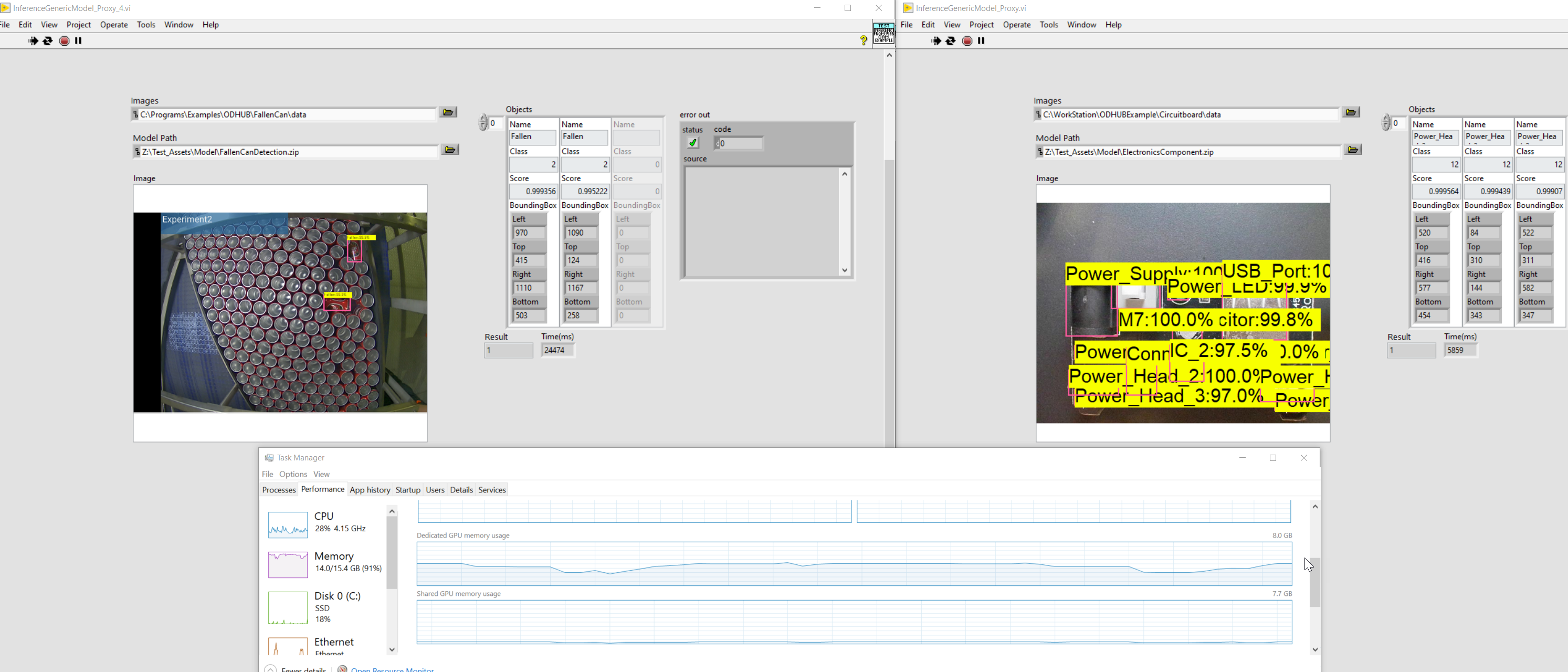

In addition, running multiple AI models simultaneously is impossible with the native APIs, because LabVIEW consumes all available GPU resources to run just a single model.

That's why ANSCenter provides an additional Proxy library. It runs in multiple threads in standard application mode and creates multiple instances, each managing its own GPU resources in a separate process. This allows multiple AI models to run simultaneously in LabVIEW.
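The process-per-model idea can be illustrated outside LabVIEW with a minimal Python sketch. This is not ANSCenter's implementation: the `ModelProxy` class, the `WORKER_SOURCE` script, and the model names are all hypothetical, and the worker only echoes requests instead of loading a real model. It shows only the general pattern, in which the host talks to one separate OS process per model, so each process can hold its own resources.

```python
import subprocess
import sys

# Stand-in for a proxy worker: a separate OS process that "owns" one model.
# A real worker would load the model onto the GPU; this one only echoes requests.
WORKER_SOURCE = r'''
import os, sys
model = sys.argv[1]
for line in iter(sys.stdin.readline, ""):      # one request per line, until EOF
    print(f"{model}|{os.getpid()}|{line.strip()}", flush=True)
'''


class ModelProxy:
    """Hypothetical host-side handle to one worker process."""

    def __init__(self, model_name):
        self.proc = subprocess.Popen(
            [sys.executable, "-c", WORKER_SOURCE, model_name],
            stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True,
        )

    def infer(self, request):
        """Send one request and return (model name, worker PID, echoed request)."""
        self.proc.stdin.write(request + "\n")
        self.proc.stdin.flush()
        model, pid, echoed = self.proc.stdout.readline().strip().split("|", 2)
        return model, int(pid), echoed

    def release(self):
        """Close the worker's stdin so it exits, then wait for its exit code."""
        self.proc.stdin.close()
        code = self.proc.wait(timeout=10)
        self.proc.stdout.close()
        return code


def run_two_models():
    """Keep two workers alive at the same time and query each one once."""
    detector, classifier = ModelProxy("detector"), ModelProxy("classifier")
    try:
        return [detector.infer("frame-1"), classifier.infer("frame-1")]
    finally:
        detector.release()
        classifier.release()
```

Calling `run_two_models()` returns one tuple per worker. The two process IDs differ, which is the point: neither "model" lives inside the host process.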

Here's how to install and use the Proxy lib:

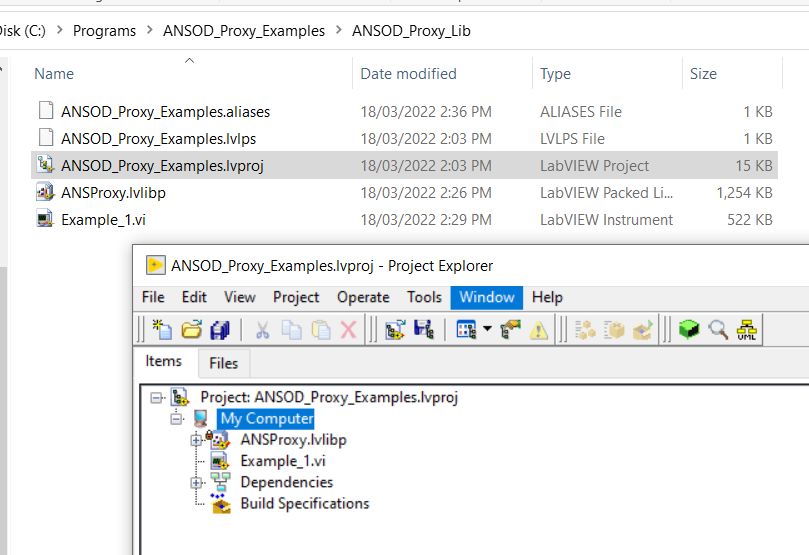

1. Download and unzip the proxy lib (note that ODHUB-LV v2.0.0.8 or later must be installed before this step).

2. Open Example_1.vi:

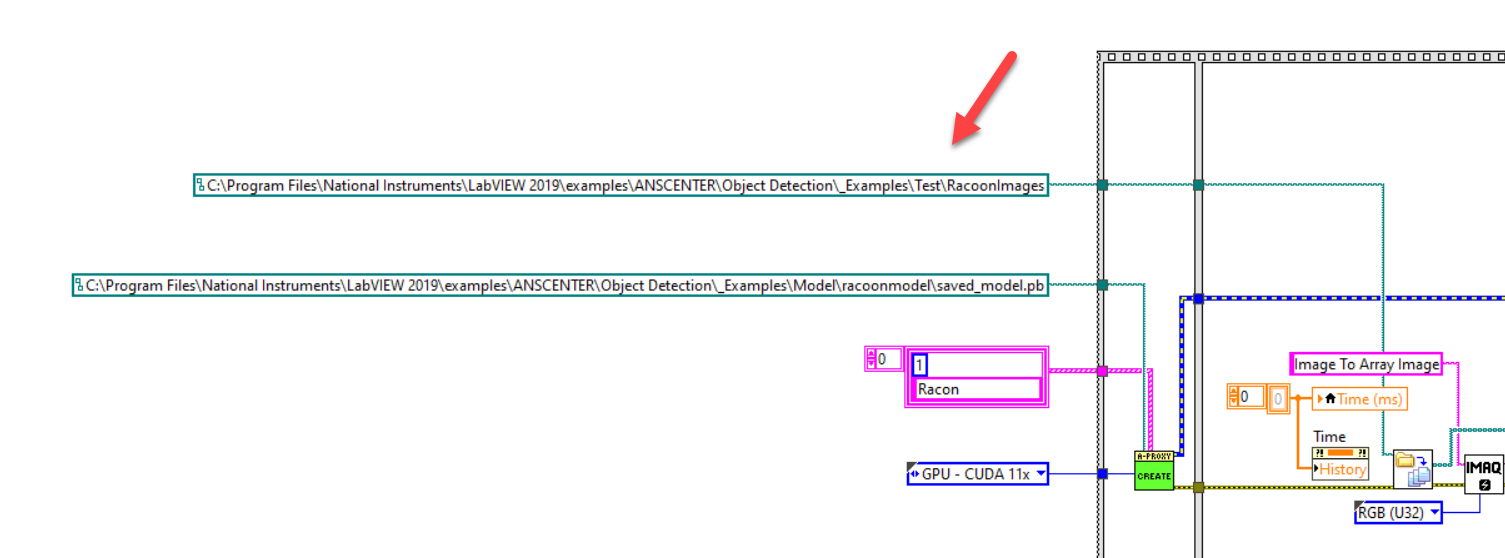

- Make sure the model path and data path match your current LabVIEW version (for example, I use LabVIEW 2020, so I must change the paths to point to LabVIEW 2020).

- Make sure you select a compatible CUDA version in Create.vi.

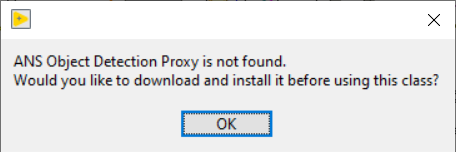
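If you are not sure which CUDA version your machine can run, one way to check is to read the banner printed by NVIDIA's `nvidia-smi` tool, which reports the highest CUDA version the installed driver supports. The sketch below is only a convenience under that assumption. The helper names `parse_cuda_version` and `driver_cuda_version` are made up for illustration, and the CUDA options that Create.vi offers are not listed here, so compare the result against what you see in the VI.

```python
import re
import shutil
import subprocess


def parse_cuda_version(smi_output):
    """Return the 'CUDA Version' from nvidia-smi's banner as (major, minor), or None."""
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", smi_output)
    return (int(match.group(1)), int(match.group(2))) if match else None


def driver_cuda_version():
    """Run nvidia-smi if it is on PATH; return None when it is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(["nvidia-smi"], capture_output=True, text=True)
    return parse_cuda_version(result.stdout) if result.returncode == 0 else None
```

`driver_cuda_version()` returns `None` on a machine without an NVIDIA driver, so treat a missing result as "unknown" rather than "unsupported".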

3. Run the example. Because your PC does not have the proxy installed yet, a dialog will appear asking you to download it.

Download and install the proxy lib, then restart your PC.

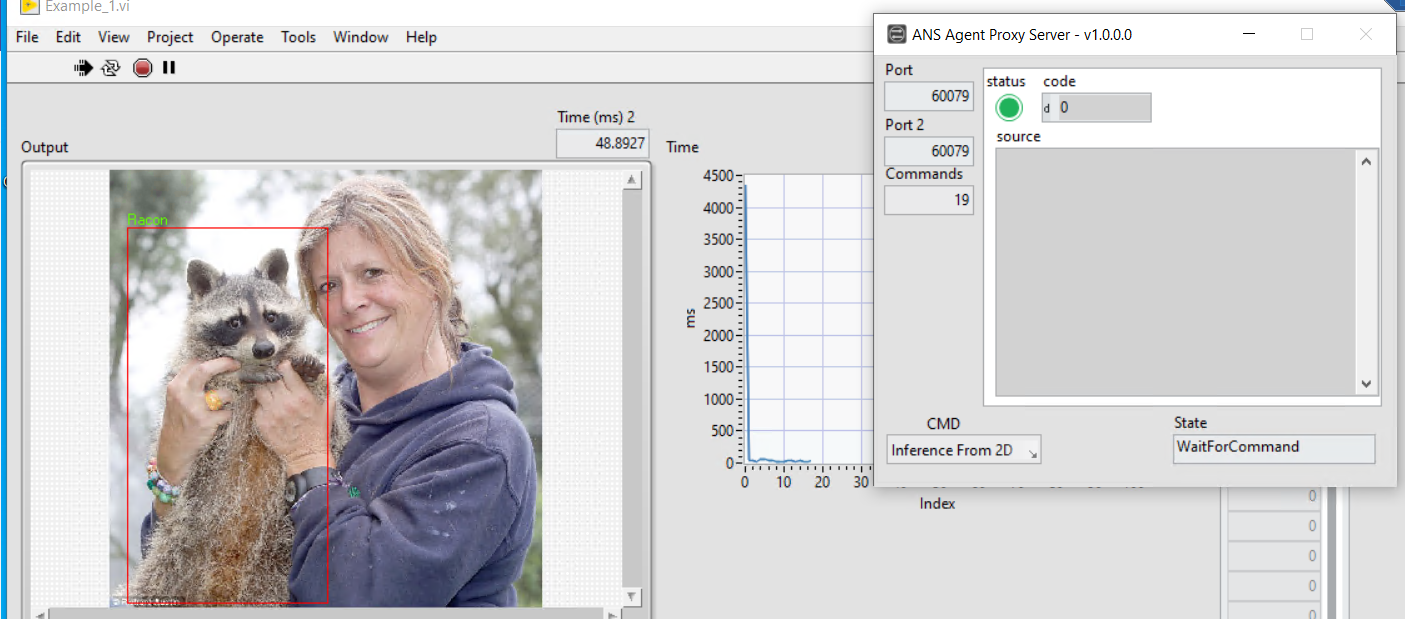

4. Return to LabVIEW and run the example VI again; you should now see the proxy working.

Using the proxy should feel the same as using the native APIs, except that it can release GPU resources and lets you load other models without quitting LabVIEW.
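Why a separate process makes this release possible can be shown with a small Python sketch. It is a stand-in, not the proxy's actual code: `loaded_model`, `swap_models`, and the model names are hypothetical, and the worker only pretends to hold a model. When a worker process exits, the operating system reclaims everything it held, so the host can start a fresh worker for the next model without restarting itself.

```python
import subprocess
import sys
from contextlib import contextmanager

# Stand-in worker: reports that it is ready, then holds on until stdin is closed.
WORKER_SOURCE = "import sys; print('loaded ' + sys.argv[1], flush=True); sys.stdin.read()"


@contextmanager
def loaded_model(model_name):
    """Start a worker for one model and shut it down when the block ends."""
    proc = subprocess.Popen(
        [sys.executable, "-c", WORKER_SOURCE, model_name],
        stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True,
    )
    try:
        status = proc.stdout.readline().strip()   # wait until the worker is ready
        yield proc, status
    finally:
        proc.stdin.close()        # EOF tells the worker to exit
        proc.wait(timeout=10)     # after exit, the OS reclaims what it held
        proc.stdout.close()


def swap_models(names):
    """Load each model in turn in a fresh worker; the host keeps running throughout."""
    history = []
    for name in names:
        with loaded_model(name) as (proc, status):
            history.append((status, proc.poll() is None))        # alive while loaded
        history.append((f"released {name}", proc.poll() is None))  # gone after release
    return history
```

For `swap_models(["model_a", "model_b"])`, each worker is reported as alive while its model is loaded and as exited after release, while the calling process never stops.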

The proxy is designed to run in multi-threaded processes, so you can load several models in parallel if your PC's hardware supports it.